What is morality? What is rationality?

What to expect

The overwhelming majority of the time, we act on autopilot. We run through the motions without having to think about what to do next. But the world isn't simple, not for us at least. Often we are kicked out of autopilot and faced with dilemmas: what do I do next? In moments like these, we are forced to think through various considerations. But how? That's precisely what I want to lay out in this chapter: the conceptual scaffolding for thinking through actions, for thinking about what's moral and what's rational. These concepts have served me well, and I'm confident they will serve you just as well.

Let's first recall where we are in our journey toward understanding how to act. The starting point for this journey was laid out in chapter 1, where we established that the First Question for any introspecting mind is “what should I do?” That’s our entry point. Key to our search is a deeper understanding of the word “should”. But first we need to agree on what we mean by certain conceptual tools, lest we talk past each other for the rest of this book.

We also established in chapter 2 exactly how not to understand normative words: by trying to pin down precise definitions of how these fuzzy words are used in practice, a futile endeavor. So in this chapter I’m not only going to answer the First Question, but also lay out a framework that provides the foundation for answering any normative question about actions.

How to conceptually engineer

Precise definitions of words so deeply intertwined with our daily functioning aren’t the path forward. What we can do is ask some useful questions that get us close enough to the standard working definition of a word, and then define it as such. My usage might differ from others’ in some edge cases, but that’s okay as long as I’m clear from the outset. The goal here, and in subsequent pieces, is to define key normative terms so that together they form a worldview: a lens through which to view ourselves and the world that makes both more legible. If the upshot of my conceptual carving up of the world is that things appear clearer when we talk about normativity, I’ll consider my aim achieved.

One particularly useful way to understand a word’s function (its use, and therefore its meaning) is to ask, “what do we expect to follow from this word’s utterance?” More specifically, when we put the word “should” into the form of the First Question, what kind of answer do we expect? Whenever we ask “what should I do?” we’re expecting a certain kind of answer. But what kind?

If a distraught friend approaches you, explains his predicament, and asks, “what should I do?”, what type of answer do you think he ultimately wants once you’ve poked and prodded a bit? What he wants (aside from, perhaps, some emotional support first) is a prescription: “Do X because of Y.” There’s an expectation that a command and reasons will follow your friend’s question. This is the work “should” does in the First Question.

That’s a big insight! Not only do we know how the word “should” is used, but we also know the shape the answer to the First Question will take! Two birds with one stone. The answer to the First Question for any introspective mind is a prescription. What can we do with this?

If we focus on prescriptions, a good starting place is to look at theories that output prescriptions. Moral theory is the obvious first choice. But in order to understand morality, we first need to understand rationality more broadly.

Normativity

Whenever we say things like:

A C major chord sounds better here.

I prefer Chinese food to Thai food.

Given the facts, Adam violated the law.

there’s an implicit “should” hiding in there. It’s implied that:

We should play a C major chord.

We should order Chinese right now.

We should prosecute Adam.

In the first two examples, the hidden “should” can be inferred from normative terms like “better” and “prefer.” The two are inextricably linked: the evaluative term implies the prescription. In other cases, as with Adam, the implication is simply understood between the speakers. That’s clear enough, but how do we even begin to define such a foundational word?

The key is, we don’t have to! As I said in chapter 2, I won’t try to give you some precise definition with necessary and sufficient conditions that outline when and where the word “should” makes linguistic sense to use. That level of precision for human language is not only unnecessary, but ultimately contrary to how language is actually used. Language is fuzzy, and it should be: fuzziness allows it to adapt to an ever-changing world and to reshape the boundaries of where a word applies as the environment we operate in shifts. Normative language is even fuzzier. It has changed dramatically over our history, as Nietzsche excitedly pointed out. We use heuristics and intuitions to determine when and where to use a given word, including the word “should”. But we still want to somehow clarify the word “should”. What to do?

Thankfully, there is a pragmatic way of understanding the word “should” without falling into the trap outlined in chapter 2, and without arbitrarily defining this key concept.

What is morality?

The answer, of course, depends on the function you think morality or ethics (I’ll use these terms interchangeably) serves. Morality seems to be a process for answering the question “what should I do?” by providing not only judgments (this is right, this is good) but, crucially, prescriptions (do what’s right, maximize what’s good). But morality isn’t the only class of normative theories that provides prescriptions. Practical rationality also aims to give prescriptions. So what’s the difference?

We can use J. L. Mackie’s understanding of morality as a close enough sketch of how we often think about morality in everyday, post-Enlightenment language (we'll talk more about pre-Enlightenment morality when we get to virtue):

“Ordinary moral judgements include a claim to objectivity, an assumption that there are objective values… I do not think it is going too far to say that this assumption has been incorporated in the basic, conventional meanings of moral terms.”

In short, moral judgements like “this is wrong” or “that’s immoral” often carry an air of objectivity about them. At least, that’s how we typically talk about morality, even though if pressed, many will admit to being moral relativists. We still use moral language as if there were some objective standard of ethics.

We can also put Mackie’s point in more technical language. He equates morality, as conventionally understood, with a set of categorical imperatives, as opposed to hypothetical imperatives. The difference between the two has to do with which terminal desires (or wants/ends/goals/aims, all used interchangeably for now), if any, we are considering. A hypothetical imperative takes the form:

Hypothetical Imperative: If you desire X, do Y

Contrast this with a categorical imperative which holds:

Categorical Imperative: Do Y, regardless of what you desire

But ethics, for many, goes beyond this. Peter Singer writes:

“A distinguishing feature of ethics is that ethical judgements are universalizable. Ethics requires us to go beyond our own personal point of view to a standpoint like that of the impartial spectator.”

In other words, ethics is about doing what’s right, not from your perspective but from “the perspective of the universe” in some sense. We usually wouldn’t call a decision that most benefits yourself but harms society at large a “moral” one. We might call it “rational” in some sense, or “egotistical”, but certainly not moral.

This seems right to me. And even if this isn’t how everyone always uses moral language, for the sake of simplicity we’ll define morality this way. (“Moral theories” that don’t fit into this box will fall into the practical rationality box anyway, so nothing is lost.) Morality has these two features:

Objective

Universalizable

So the prescriptions of morality are given regardless of the desires you hold, and are determined by taking a “universal” viewpoint. Utilitarians take the “standpoint of the universe” by counting everyone’s well-being equally. Kantians say the one true Categorical Imperative asks us to consider our action applied universally, independent of our personal desires, to see if it yields contradictions (e.g. universalizing stealing would render private property null, and therefore stealing, the concept of taking private property, would no longer make sense). Contractualists might ask us to consider which rules would be best for each of us if everyone adopted them. Virtue ethicists, by contrast, break from the pack: they don’t require universalization of anything and instead fall back on optimizing for excellence, flourishing, or individual happiness. This makes virtue ethics better categorized as practical rationality, defined below. Morality thus understood can be contrasted with systems of practical rationality.

The most famous form of practical rationality is Humean rationality. This is a strictly means-ends style of thinking, made up entirely of hypothetical imperatives. The desires plugged into the system can be arbitrary, like the rules of soccer, etiquette, or the law. They can also be the more universal desire to serve one’s self-interest, understood as (i) happiness, (ii) desire fulfillment, or (iii) some objective list of desires or values (Parfit, 1984, §3).

It's important to note that rationality is not the same as egoism. Philippa Foot points out that practical rationality is broader than simple self-interest. If you respond to, or are at least partially compelled by, a (presumably coherent) reason for action, then you are acting in a practically rational way. Hume would agree. This reason doesn’t have to be a self-interested one. It can be an appeal to your sense of justice, to your sense of beauty, even to your sense of morality. What ultimately matters here is that reasons for acting compel you, that you’re subject to rational persuasion. So any system of action guidance that appeals to coherent reasons for acting can be considered a system of practical rationality (Philippa Foot, Natural Goodness, 13).

(It’s at this point that some might claim that all reasons for acting are necessarily self-interested because we’re selfish creatures. This isn’t a line of thought I want to spend much time on, as there are strong evolutionary reasons for humans, one of the most pro-social animals, to be hard-wired to truly care about others. Was this better for our long-term survival? Yes, but you and I are not our species. We are not the collective to which we belong, and being the result of strategies that aim for the “selfish” survival of the collective does not mean that the resulting individuals must also be selfish. It’s simply a better strategy to program us to give a damn about others. We are mesa-optimizers, after all. First-person experience also closely matches this. Finally, casting all desire fulfillment as “selfish” simply isn’t what we mean by the word. If we donate to charity because it gives us the warm fuzzies, not many would call that a selfish act, and neither do I.)

Defenders of morality claim (circularly, as we’ll see in the next chapter) that moral reasons for acting take priority. If considerations of prudence (self-interest) conflict with considerations of benevolence (morality), then we must defer to the latter. Practical rationality, by contrast, holds that moral reasons are simply one among many reasons that count in favor of an action. This, of course, makes practical rationality somewhat subjective, as we don’t all respond equally to the same reasons for acting.

Following the model of morality outlined above, we can say that both broad normativity and practical rationality share two fundamental features. Both are:

Subjective

Particular

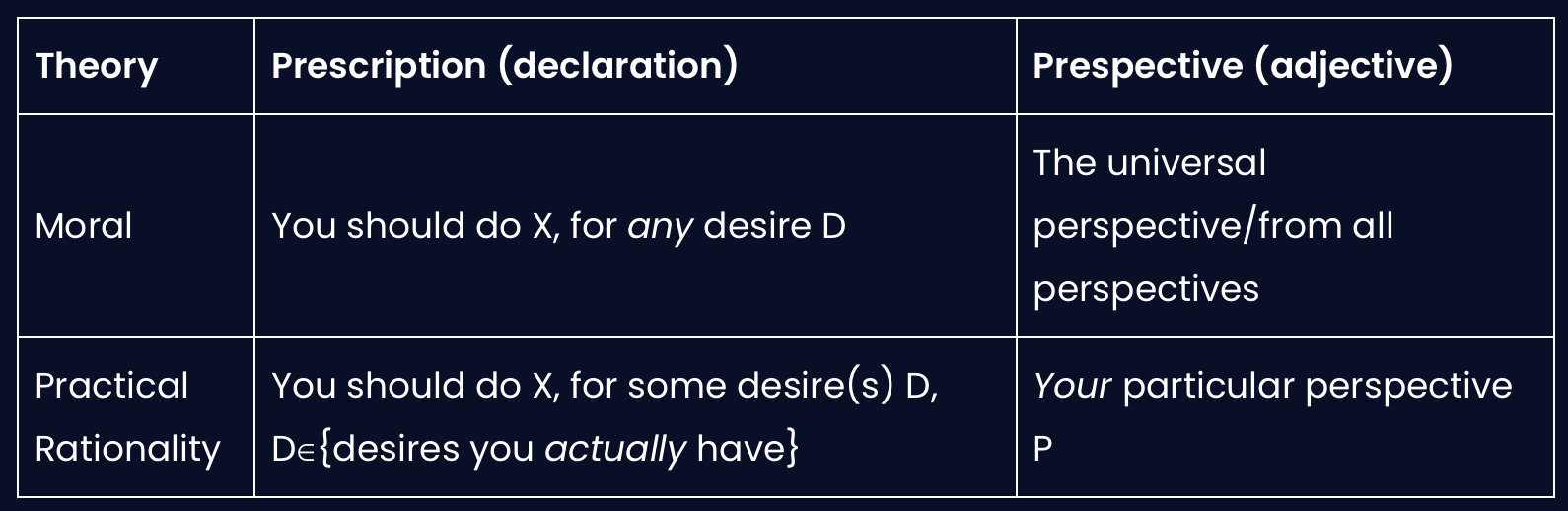

Casting both sets of normative theories in terms of desires, we get the following:

Final notes on normative theories

I put the parts of speech in this table to highlight the linguistic nature of all normative theories. A normative theory is primarily conceptual. Given certain information (verbal, conceptual, experiential), it outputs certain linguistic features. But not just any linguistic features: these are linguistic features that serve as tools for decision-making. The goal is that by knowing, say, that my internalized moral theory classifies this action as “wrong” (most often in the form of an intuition or feeling), I know not to do it. But note that these theories don’t have to be thought of explicitly. Moral theories are simply the formalization of certain thought patterns, intuitions, and feelings in a way that can be analyzed more effectively (by thinking, talking, or writing about them). This is similar to the mechanism at play when mathematicians write out symbols on paper: it gets the ideas clear and out of our heads so we can occupy our thoughts with more interesting ideas.

One last note about these normative theories: they take in the output of our various descriptive models of the world. In all normative theories worth considering, what one should do depends, of course, on what in fact (a) is the case and (b) would be the case under various circumstances. How each theory weighs those facts and those probability estimates is specific to that theory.

So what?

Now that we know what we're talking about when we talk about morality and practical rationality, we can finally start to answer the First Question. We'll do this in the next chapter by thinking through the question using Hume's Is/Ought frame, which will ultimately show that morality has no legs to stand on.